5 Ways AR, VR, and AI are Saving Humanity

FutureXLive was a one-day conference created by Moxie to explore the possibilities of emerging technologies in the fields of Virtual Reality (VR), Augmented Reality (AR), Mixed Reality (MR), Artificial Intelligence (AI), and Brain-Machine interfaces. Thank you, Dan Smigrod, for the opportunity to attend as your guest on October 5, 2017. This inaugural conference featured an all-star lineup of international and local speakers, including:

- Dr. Michio Kaku, author of #1 New York Times Best Seller, The Future of the Mind

- Dr. Helen Papagiannis, leading expert in the field of Augmented Reality (AR)

- David Putrino, Director of Rehabilitation Innovation at Icahn School of Medicine at Mount Sinai

Local speakers included:

- Annie Eaton, CEO, Futurus

- Dave Beck, Managing Partner, Foundry45

- John Buzzell, President, You Are Here

- Dr. Grace Ahn, Associate Professor, UGA

- Janet H. Murray, PhD, Associate Dean at Ivan Allen College, Georgia Tech

- Dr. Adriane B. Randolph, professor and founder of the BrainLab. Her research focuses on brain-computer interface systems.

5 Ways AR, VR, and AI are saving humanity

1. Diagnose Concussions on the Field

There are over 10 million concussions every year, most undiagnosed. Recently, scientists have discovered a biomarker for concussions, but what does it mean on the field? Now physical trainers and coaches can use a VR headset developed specifically for testing athletes for concussions.

2. VR and AR will help the deaf hear, the blind see, cure Parkinson’s, and manage pain.

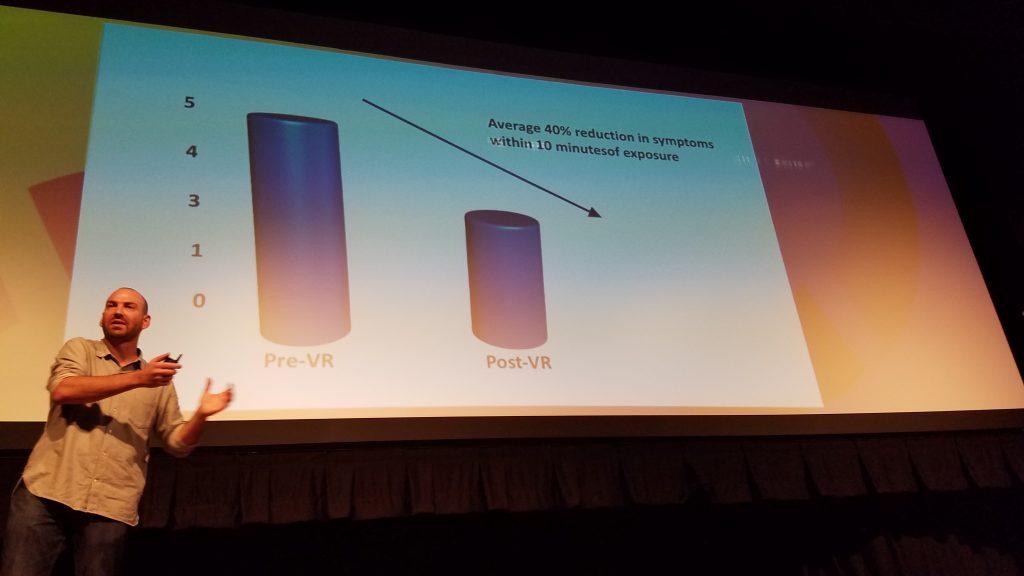

David Putrino, Director of Rehabilitation Innovation at Icahn School of Medicine at Mount Sinai, presented a study on helping the deaf experience music through vibrations. What was truly amazing was that the same technology helped a musician with Parkinson’s play the piano for the first time in a decade. His research also showed a 40% average reduction in neuropathic pain symptoms with 10 minutes of VR therapy.

If you have trouble reading menus or cereal boxes in the grocery store, how about AR glasses that read labels for you? Dr. Helen Papagiannis presented OrCam glasses, which read text out loud.

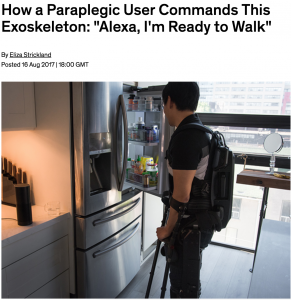

3. David Putrino would also like to put an Amazon Alexa into every home of people suffering from spinal cord injury.

(Amazon would like every household to purchase at least one Echo.) The initial reason is obvious: to help patients increase their independence through voice-controlled smart home automation, such as controlling lights and thermostats. In my research, I found that Alexa is also being used to help paraplegics command their robotic limbs.

4. VR and AR will make us more empathetic.

We’re all familiar with the expression “put yourself in someone’s shoes,” meaning to understand their point of view. Dr. Grace Ahn presented her research on “Perspective Taking,” which showed an increase in empathy after a VR experience. Dr. Ahn’s test subjects became VR cows and experienced going to the slaughterhouse.

5. We will be able to smell and taste Augmented Reality

Dr. Helen Papagiannis is engaging all 5 senses in her AR experiences. We have experienced sight, sound, and touch through haptic feedback (e.g., the vibrations from our smartphones), but what about smell and taste?

Cutting-edge AR experiences now include smell and even taste. Dr. Papagiannis presented the AR cookie, an augmented reality device that provides taste sensations. I would equate this to tasting a cookie without any calories. The AR cookie might not save humanity, but it could help someone with severe allergies experience food.

I could have written 5 more articles about FutureXLive discussions on how AR, VR, MR, and Brain-Computer interfaces are changing the world, practical applications with real ROI value, or Dr. Michio Kaku’s predictions on the future of humanity. If you’d like to learn more, feel free to contact me, or sign up to attend next year’s Moxie conference.

But wait, there’s more! In the video below, I’m repairing a pump at Foundry45’s VR Demo. Video Credit: Justin Blake